Given enough time, will Mark get ruined? (I.e. does his risk of ruin approach 1 as the number of trials approaches infinity?)

My intuition says yes, Mark will eventually be ruined, but I can't prove it.

The paradoxical thing is that the expected number of flips to ruin is infinity, but the probability of it eventually happening is 100%. You would think if it must eventually happen the number of flips would be finite. Just one of many infinity paradoxes.

If Alice has a finite bankroll of m units, then a sequence of m + n losses will certainly wipe out either player. The law of large numbers tells as that they will approach true odds of (1/2)^(m+n), and hence a wipeout. But this proof relies on a finite bound for the bankroll. In the infinite version there's no finite bound to Mark's bankroll because he may be winning coins from Alice. How does the proof work?

I don't know if this forum supports math formatting, but I'll pretend this is a latex editor for now...

Let $n$ be Bob's initial bank roll. Then, we can define the Markov chain that represents Bob's bankroll over time. Our initial state is $0$, there is an absorbing state at $-n$ representing ruin for Bob, and positive states infinitely off to the right.

Let $P_i$ denote the probability that starting at state $(i)n$, we hit state $-n$ before we hit state $(i+1)n$. So, $P_0$ is the probability that Bob busts before reaching state $n$. By symmetry, we know that $P_0 = 1/2$. Since the state $-n$ is absorbing, we know that we lose half of our probability to ruin. The remaining $1/2$ probability must have hit state $n$. Let this serve as our base case. Assume we know $P_i$. Now, we can consider $P_{i+1}$, the probability that we reach ruin from state $(i+1)n$ before we reach state $(i+2)n$. Again, we can use symmetry to see that $P_{i+1} = 1/2(P_i + (1-P_i)P_{i+1})$, as we reach state $i(n)$ before state $(i+2)n$ half of the time, at which point we either get to ruin or return to state $(i+1)n$. Rearranging terms, we get that $P_{i+1} = P_i/(1+P_i) = 1/(i+1)$.

Now that we know the values of $P_i$, we can sum what goes to the absorbing state. From state $0$, we get contribution $P_0$. The remaining probability goes to state $n$, which gives us contribution $P_1(1-P_0) = 1/6$. For state $(i+1)n$, we get contribution $P_{i+1} \prod_{t=0}^{i} (1-P_i)$. By cancelling terms in this sum, we get $1/(i+1)(i+2)$. So, summing these terms gives us our total contribution to the absorbing state, which is $\sum_{t=0}^i 1/(i+1)(i+2) = (i+1)/(i+2)$, which gives us $1$ in the limit as desired.

I know the above is sketchy, but I hope it gives an idea (and is right... I have a tendency to make errors).

Cheers!

Quote: WizardYes, he will eventually be ruined.

The paradoxical thing is that the expected number of flips to ruin is infinity, but the probability of it eventually happening is 100%. You would think if it must eventually happen the number of flips would be finite. Just one of many infinity paradoxes.

Does this also apply if the game is unfair? Let's say that Mark gets paid 6:5 when he wins but only pays Alice even money when she wins. Won't he eventually get ruined by Alice's infinite bankroll anyway? It seems like it should take longer than in a fair game, but wouldn't we be talking about infinity (fair game) compared to infinity times x (Mark's edge), which are equivalent since (infinity)(anything)=infinity?

Quote:Does this also apply if the game is unfair? Let's say that Mark gets paid 6:5 when he wins but only pays Alice even money when she wins. Won't he eventually get ruined by Alice's infinite bankroll anyway? It seems like it should take longer than in a fair game, but wouldn't we be talking about infinity (fair game) compared to infinity times x (Mark's edge), which are equivalent since (infinity)(anything)=infinity?

Given that he has an advantage, he's very much like a casino, since they only have finite bankrolls. I would think that Mark's RoR approaches but never reaches 0, just as casinos have a small, but > 0 RoR, which goes down as they make more money playing.

Quote:How could infinite get ruined in the first place? It's never ending.

As the problem states, Alice cannot get ruined as she has infinite units. The only question is if Mark will necessarily be ruined if he keeps playing.

Quote: JoeshlabotnikDoes this also apply if the game is unfair? Let's say that Mark gets paid 6:5 when he wins but only pays Alice even money when she wins. Won't he eventually get ruined by Alice's infinite bankroll anyway? It seems like it should take longer than in a fair game, but wouldn't we be talking about infinity (fair game) compared to infinity times x (Mark's edge), which are equivalent since (infinity)(anything)=infinity?

Nope, his winnings will drift towards the right infinitely, with some fixed probability of ruin (mostly in from the early steps of the process). Think of it like any positive expectation game... Your probability of ruin decreases as bankroll increases, so after sufficient time, this is negligible.

Just because infinity is involved doesn't mean it trumps everything. For instance, the probability of ruin could be geometrically decreasing in the number of rounds as Bob's bankroll increases in a +EV game, so, even when summed over infinite rounds the total risk of ruin is finite. If we were to do the calculation above for this sort of scenario, instead of the sum of contribution to the absorbing state tending to $1$, it would tend towards some small finite probability.

Quote: lllI'm not sure how to prove this tho.

If Alice has a finite bankroll of m units, then a sequence of m + n losses will certainly wipe out either player. The law of large numbers tells as that they will approach true odds of (1/2)^(m+n), and hence a wipeout. But this proof relies on a finite bound for the bankroll. In the infinite version there's no finite bound to Mark's bankroll because he may be winning coins from Alice. How does the proof work?

If we just set up the transition matrix as follows…

The first state (the absorbing state) represents Mark’s bankroll being zero (ruin).

Then the steady-state vector would be [1 0 0 0 …

Therefore, the probability of ruin is 1.

Quote:Quote: JoeshlabotnikDoes this also apply if the game is unfair? Let's say that Mark gets paid 6:5 when he wins but only pays Alice even money when she wins. Won't he eventually get ruined by Alice's infinite bankroll anyway? It seems like it should take longer than in a fair game, but wouldn't we be talking about infinity (fair game) compared to infinity times x (Mark's edge), which are equivalent since (infinity)(anything)=infinity?

Quote: djtehch34tNope, his winnings will drift towards the right infinitely, with some fixed probability of ruin (mostly in from the early steps of the process). Think of it like any positive expectation game... Your probability of ruin decreases as bankroll increases, so after sufficient time, this is negligible.

Just because infinity is involved doesn't mean it trumps everything. For instance, the probability of ruin could be geometrically decreasing in the number of rounds as Bob's bankroll increases in a +EV game, so, even when summed over infinite rounds the total risk of ruin is finite. If we were to do the calculation above for this sort of scenario, instead of the sum of contribution to the absorbing state tending to $1$, it would tend towards some small finite probability.

It does get a little paradoxical here, but the probability of ruin would still be 1. Changing the probabilities of the transient states in Mark’s favor (haha, is Mark for Markov?) will still produce the steady state vector of [1 0 0 …

Quote: JoeshlabotnikDoes this also apply if the game is unfair? Let's say that Mark gets paid 6:5 when he wins but only pays Alice even money when she wins. Won't he eventually get ruined by Alice's infinite bankroll anyway?

No.

Let p=Probability Mark wins even money.

Let b=Mark's initial bankroll.

Then probability of Mark's eventual ruin is [(1-p)/p]^b.

For example, Mark starts with a bankroll of 10 units and probability of winning each flip of 51%. The probability he is eventually ruined equals 67.03%. So, the probability he will never bust out, and keep growing his bankroll infinitely, is 32.97%.

Granted I changed the probability of winning to Mark an advantage, rather than the payoff, but the concept is the same.

Quote: WizardNo.

Let p=Probability Mark wins even money.

Let b=Mark's initial bankroll.

Then probability of Mark's eventual ruin is [(1-p)/p]^b.

For example, Mark starts with a bankroll of 10 units and probability of winning each flip of 51%. The probability he is eventually ruined equals 67.03%. So, the probability he will never bust out, and keep growing his bankroll infinitely, is 32.97%.

Granted I changed the probability of winning to Mark an advantage, rather than the payoff, but the concept is the same.

How does the formula change if there are odds? What if there are more than 1 outcome?

ie: Roll 1 die, if you roll a 1, 2, or 3, you lose $1. If you roll a 4, you push. Roll a 5 you win $2. Roll a 6 you win $3.

Quote: PeeMcGee

It does get a little paradoxical here, but the probability of ruin would still be 1. Changing the probabilities of the transient states in Mark’s favor (haha, is Mark for Markov?) will still produce the steady state vector of [1 0 0 …

As I alluded to in my first post, this sort of argument fails since we have a countably infinite state space. If this were not the case, the risk of ruin for any +EV game in the limit would be 100%... If that were the case, what would we all be doing on this forum :p?

You can calculate the actual probability of ruin for an unfair game in the limit using the formula given by the Wizard in his post.

Also, I noticed I switched Mark to Bob... Oops... I guess I only work with Alice and Bob.

Quote: WizardYes, he will eventually be ruined.

The paradoxical thing is that the expected number of flips to ruin is infinity, but the probability of it eventually happening is 100%. You would think if it must eventually happen the number of flips would be finite. Just one of many infinity paradoxes.

If there is an infinite number of trials, against the infinite bankroll, wouldn't one of them be the case where the player with the finite bankroll never loses a flip? Therefore, even with a finite number of trials (or even a single trial), the possibility of the "no loss" outcome cannot be discounted. If that possibility exists, how can the probability of ruin be 100% (rather than 99.9999999999999999999999999999999999999999999999999999~%)?

Quote: AyecarumbaIf there is an infinite number of trials, against the infinite bankroll, wouldn't one of them be the case where the player with the finite bankroll never loses a flip? Therefore, even with a finite number of trials (or even a single trial), the possibility of the "no loss" outcome cannot be discounted. If that possibility exists, how can the probability of ruin be 100% (rather than 99.9999999999999999999999999999999999999999999999999999~%)?

Though there are many ways to explain away this "paradox," the easiest way might be to ask: What's the difference between 100% and 99.999 repeating%? Alternatively, what if you want to write down the probability of the "no loss" outcome?

Quote: djtehch34tThough there are many ways to explain away this "paradox," the easiest way might be to ask: What's the difference between 100% and 99.999 repeating%?...

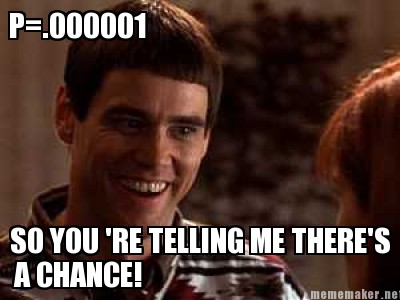

It is the difference between "Never" and "A Chance".

This topic (0.99 repeating = 1) was previously covered in another thread, but my question now is, at how many places of precision does 99.99~% become 100%?

Quote: djtehch34tAlternatively, what if you want to write down the probability of the "no loss" outcome?

It is the difference between a "1" at the end of a string of zeros (e.g., p=.0000000001), and a single zero (p=0).

Quote: Ayecarumba

It is the difference between "Never" and "A Chance".

I think this stems from a misunderstanding of the definition of "probability zero." When the space of outcomes is infinite, the intuitive thought that "probability zero" means "impossible" breaks down.

Quote: Ayecarumba

It is the difference between a "1" at the end of a string of zeros (e.g., p=.0000000001), and a single zero (p=0).

Begs the question, how many zeroes?

Quote: djtehch34tI think this stems from a misunderstanding of the definition of "probability zero." When the space of outcomes is infinite, the intuitive thought that "probability zero" means "impossible" breaks down.

I think I see what I am missing... By definition, the set of infinite outcomes includes events with p(0). Probability of zero is different than "impossible".

Quote: djtehch34tIBegs the question, how many zeroes?

I assume it doesn't matter. p(0) = p(0.0) = p(0.00) = p(0.000)... no?

It's when that pesky "1" is introduced at the end of the string of zeros, that things get interesting. How many zeroes before the "1" at the end doesn't matter?

Quote: Ayecarumba

It's when that pesky "1" is introduced at the end of the string of zeros, that things get interesting. How many zeroes before the "1" at the end doesn't matter?

Yes, the 1 makes it a different question :p. "Write an infinite number of zeroes and then put 1 after them" isn't really well defined. But, one could try to write it down as 1 - .999 repeating, which as we said earlier, is just 1 - 1 = 0.