Meanwhile, JB has been working on pai gow poker, and we hope to publish some ground-breaking stuff on that soon. That game involves combin(53,7)*combin(46,7) = 8,250,459,031,214,400 combinations, or 297 times as many as Heads Up Hold 'Em. JB wrote to me that he is using multi-core processing to cut down on the time it takes.

What is that, you might ask? Both my computers, and his, are actually four computers, or cores. So, it isn't a big sacrifice in speed to break the program into four parts, each doing 25% of the situations, and running them simultaneously. How do you do that? I wrote to JB to ask. I'm going to just copy and paste what he wrote below. So, give JB full credit for the following. I'm posting this for the edification of the forum, and to open to discussion if there might even be better ways.

Meanwhile, my Heads Up Hold 'Em results should results should be finished on Nov. 30.

****

First you should determine how many cores your CPU has, as this will tell you how many threads you should run. If you right-click an empty area of your taskbar, a menu should appear; click on Start Task Manager.

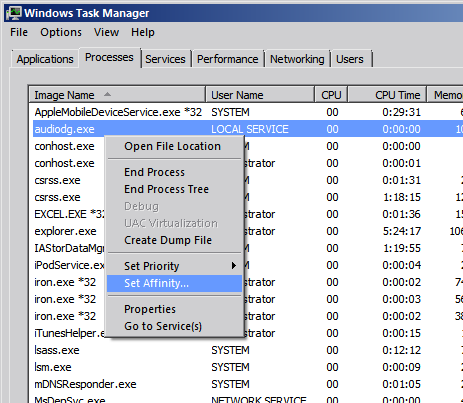

Go to the Processes tab, right-click any entry in the list, and choose "Set Affinity..." (if you get an Access Denied message, keep trying with different entries until it lets you)

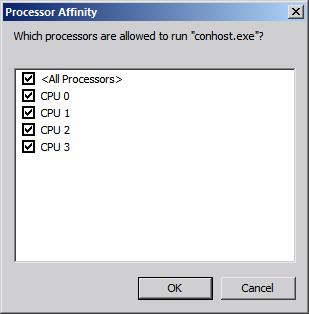

Something like this will appear, indicating how many cores your CPU has:

The above indicates that there are four cores.

Cancel that and then close the Task Manager.

Next you need to figure out how to go about splitting up the work among the cores you have available. I wrote a multi-threaded program with four threads, but learning how to make a multi-threaded program takes a lot of time to get right. If you've never done it before, then the easier approach would be to compile multiple versions of your program, with each version working on its own specific range of hands. Then start each separate version independently. Have them display the combination counts when they have finished running, and manually add them all together when all copies have finished.

Since you have two computers, figure out how many cores you have on each computer, and make that many copies. So if one computer has 2 cores and the other has 4 cores, divide the hands up into 6 and make 6 separate versions of your analyzer, with each one working on its own range of 1/6th of the hands. Launch each copy after all of them have been compiled. If the single program (before being split up) reads or writes to one or more files, you'll need to make sure that you either have each program work with its own set of files, or have them open the files in shared-access mode so that none of them conflict with each other.

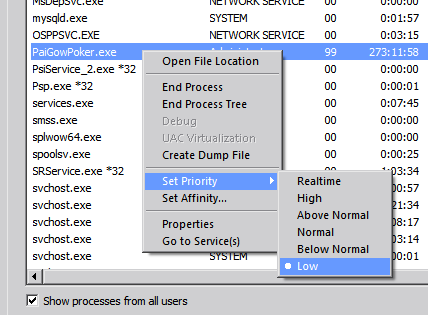

On the computer that you will be using for day-to-day stuff, after you have launched the programs, you should set them to run on low priority using the Task Manager -- right-click the taskbar and select Start Task Manager, then right-click on the program name, choose Set Priority, and select Low. It will confirm if you really want to change its priority; click Yes.

If you don't set them to run on low priority, your computer will become frustratingly sluggish when trying to do normal stuff like open a web browser.

An even more extreme solution is to use elastic cloud computing, where you basically use the approach Mike has described except you farm out several thousand virtual machines to do all the processing. Say, one for each starting hand. It costs money, of course. Depending on what plans you use and whether you pay up front or on an ad-hoc basis, you can rent an 8 core machine for between $0.50 - $10 / hr.

Divide up your work among enough of them, and even some of the most ludicrously complex games (double draw poker, anyone?) could be analyzed for a cost.

I have yet to explore the cloud solution in more detail other than Microsoft Azure lets you bill by the minute and Amazon's EC2 requires you to bill by the hour, and that there's a bit more overhead involved in setting up a common storage area to store the individual results and collate them. As well, how I would pass the parameter to each spawned instance isn't entirely obvious to me yet, besides entering them in manually and that might be problematic.

Then I went to a different system where I had a table designed to hold information about what hands were being worked on and another tracking the SPIDs that were doing the work. I could allocate work from what was next in the queue without having to reprogram and I could shut down a core without losing data. That way I could start and stop as many threads as I wanted to. In MS/SQL, each thread can be allocated an idle core. With 12 threads, I could crunch some numbers!

I found that the laws of diminishing returns would kick in when I reached 8 or 9 processes. MS/SQL uses one core to do its internal cleanup work and that work can't be divided. Once you max out the central clean-up core then adding more threads just makes the whole thing slow down. That's okay though, I still had two or three idle cores to use for other things.

The advantage of having a work allocation table is that, if you start a calculation thread and it is allocated to one of the cores that is already in use, you can stop it and kill the SPID and restart it to try to get an unallocated core. Because you stop it cleanly, you do not lose any information.

I hope that was readable.

Of course, processing power might catch up before it's worth doing it that way over using cloud sources.

Quote: thecesspitIt would suggest if there's a REALLY big problem that 6 cores for 4 weeks wouldn't do it, you crowd source the problem across many machines. Especially if the problem could be divided by starting hand, or distinct shuffles.

Of course, processing power might catch up before it's worth doing it that way over using cloud sources.

Cloud computing is so cheap and reliable right now, I doubt there could ever be advances in processor power that could compare.

Quote: WizardI'm in the process of analyzing Heads Up Hold 'Em. This is a Ultimate Texas Hold 'Em variant by Galaxy Gaming that was seen at G2E and Raving, and already spreading fast in Washington state. There are COMBIN(52,2)*COMBIN(50,3)*COMBIN(47,2)*COMBIN(45,2) = 27,813,810,024,000 pairings of seven-card hands to work through and score. Even with all the short cuts I put in, it will take my program three to four weeks to go through them all.

Meanwhile, JB has been working on pai gow poker, and we hope to publish some ground-breaking stuff on that soon. That game involves combin(53,7)*combin(46,7) = 8,250,459,031,214,400 combinations, or 297 times as many as Heads Up Hold 'Em. JB wrote to me that he is using multi-core processing to cut down on the time it takes.

What is that, you might ask? Both my computers, and his, are actually four computers, or cores. So, it isn't a big sacrifice in speed to break the program into four parts, each doing 25% of the situations, and running them simultaneously. How do you do that? I wrote to JB to ask. I'm going to just copy and paste what he wrote below. So, give JB full credit for the following. I'm posting this for the edification of the forum, and to open to discussion if there might even be better ways.

Meanwhile, my Heads Up Hold 'Em results should results should be finished on Nov. 30.

****

First you should determine how many cores your CPU has, as this will tell you how many threads you should run. If you right-click an empty area of your taskbar, a menu should appear; click on Start Task Manager.

Go to the Processes tab, right-click any entry in the list, and choose "Set Affinity..." (if you get an Access Denied message, keep trying with different entries until it lets you)

Something like this will appear, indicating how many cores your CPU has:

The above indicates that there are four cores.

Cancel that and then close the Task Manager.

Next you need to figure out how to go about splitting up the work among the cores you have available. I wrote a multi-threaded program with four threads, but learning how to make a multi-threaded program takes a lot of time to get right. If you've never done it before, then the easier approach would be to compile multiple versions of your program, with each version working on its own specific range of hands. Then start each separate version independently. Have them display the combination counts when they have finished running, and manually add them all together when all copies have finished.

Since you have two computers, figure out how many cores you have on each computer, and make that many copies. So if one computer has 2 cores and the other has 4 cores, divide the hands up into 6 and make 6 separate versions of your analyzer, with each one working on its own range of 1/6th of the hands. Launch each copy after all of them have been compiled. If the single program (before being split up) reads or writes to one or more files, you'll need to make sure that you either have each program work with its own set of files, or have them open the files in shared-access mode so that none of them conflict with each other.

On the computer that you will be using for day-to-day stuff, after you have launched the programs, you should set them to run on low priority using the Task Manager -- right-click the taskbar and select Start Task Manager, then right-click on the program name, choose Set Priority, and select Low. It will confirm if you really want to change its priority; click Yes.

If you don't set them to run on low priority, your computer will become frustratingly sluggish when trying to do normal stuff like open a web browser.

This is great information for me, thanks! I was able to solve a couple of long-running irritating problems using your methods on cleaning up processes and priorities, and I REALLY appreciate it!

I also compile with the -Ofast flag under gcc or g++. You might consider optimization flags in your own compilation.

You can see in the image that I am about 864 minutes into the computation. This is equivalent to 13.8 days to run the cycle on a single processor.

Start here: http://w8isms.blogspot.com/2013/04/gpgpu-performance-tests.html?m=1Quote: NareedIf your PC has a high end video card, you can use its CPU to run other programs as well (assuming you're not taxing it by running graphic-intensive programs at the same time). I've no idea how to do this, but programs like BOINC use it up (and made my video card crash, too).

Even a 2-3 year old discrete GPU has massive parallel capacity compared to a new x86 chip. They were built to handle parallel numeric computations, and that's exactly what large poker analyses are. Anyone with an upgradeable desktop can often plug in a discrete GPU and significantly increase custom analysis code performance. If you do a lot of brute force looping, it could be a very worthwhile $300 or so.

Quote: CRMousseauCloud computing is so cheap and reliable right now, I doubt there could ever be advances in processor power that could compare.

How does one go about purchasing time on the cloud? I might have analyzed Lunar Poker years ago if I knew about this option.

The Pai Gow Poker analyzer I have running is a single program which spawned four threads, each thread running on its own core. I would also claim that it is effectively analyzing combin(53,7)*combin(46,7)*combin(7,2) = 173,259,639,655,502,400 (173.26 quadrillion) scenarios, because the 21 ways to set each hand need to be analyzed to determine the best play. However, with all of the shortcuts I have discovered and/or implemented, it is actually only analyzing 3,245,840,433,079,200 (3.2 quadrillion) scenarios which, while still massive, is a reduction of just over 98%.

I stopped and restarted it several times (it resumes where it left off whenever it is restarted) after coming up with new ways to optimize the code inside the major bottleneck. I'm fairly convinced that I cannot optimize the code any further.

As of right now it is 22.4% complete, and analyzing at an average rate of 2.3 hands per second (0.58 hands per second per core). If this rate is maintained, the program should finish in about 4 weeks, although an unknown amount of additional time will be needed to study the results when it has finished.

Quote: WizardGreat comments so far!

How does one go about purchasing time on the cloud? I might have analyzed Lunar Poker years ago if I knew about this option.

The one that I've looked into is Amazon EC2. They have a good amount of info on their website, and they give you a certain amount of free computing, so you can get your feet wet. They also have an option where you can bid on computing time, but they can cancel your process as soon as your bid amount is lower than the current rate; if you go this way, you can save money, but you need to have processes that can be interrupted.

Quote: CrystalMathThe one that I've looked into is Amazon EC2. They have a good amount of info on their website, and they give you a certain amount of free computing, so you can get your feet wet. They also have an option where you can bid on computing time, but they can cancel your process as soon as your bid amount is lower than the current rate; if you go this way, you can save money, but you need to have processes that can be interrupted.

My basic problem with Amazon EC2 that I can see is that they bill by the full hour, even for a one minute run. This is entirely unconscionable to me. Not often I'll say I find Microsoft's practice the less repugnant of the two, but they bill by the minute.

In my case, the shared resources are in memory and don't create much of a bottleneck until you start that ninth or tenth process. Programming for a cloud seems to me a bit of a challenge since units of work can get lost and you have to keep track of which ones need to be resent. That in itself may become unmanageable if you require a table with all possible combinations and a status for each one.Quote: socksHow often is the bottleneck a single large LUT? Are there any cases where the cloud doesn't offer a lot of advantage over a multiprocessor machine because all your processes want to share the one look up table? This seems especially possible with cases where the primary LUT might have some depth (BJ variants?) but can be pruned while building it by keeping things ordered, which would make it harder to break up. Unfortunately, I haven't quite gotten to the point myself to experiment here.

I tried starting a computation status for my poker variant (Cameltoe Poker) but when the table got to 6 gigs, I thought better of it. But I'm not sure how you would break your process up for the cloud without a status table for each unit of work unless you make each unit of work rather large. A programming challenge that I'm not up to (for free).

Quote: s2dbakerIn my case, the shared resources are in memory and don't create much of a bottleneck until you start that ninth or tenth process. Programming for a cloud seems to me a bit of a challenge since units of work can get lost and you have to keep track of which ones need to be resent. That in itself may become unmanageable if you require a table with all possible combinations and a status for each one.

Thanks for the thoughts. I'm presently using a recursive algorithm which, of course, trades speed for expressiveness, so I think the management overhead may not be that complicated if the problem is fundamentally easy to break up. Then, hopefully, I can make that speed back up on the other side. I glanced back over Nairn's BJ paper and he used "a 32-processor cluster by partitioning into different up cards and different splitting pairs.", which doesn't sound that bad.

That said, I've been thinking though that something like a 12 core Mac Pro may end up being better than the cloud for some calculations depending on where the bottleneck is and whether you can make good use of large tables. I'm new to the cloud, but I believe Heroku's big dyno's are 2G now and that sounds smallish to me, for the tools I'm using. I haven't checked out the competition though. I believe the new mac pro also has a fat 30MB cache that might provide some additional speed (if I change out my data structures). Oh well, I should stop fantasizing about speed and get back to it.